Introducing LLM training and RAG implementation services

Data Preparation:

- Gather and clean relevant dataset

- Format data to align with LLaMA's input requirements

- Divide data into training and validation sets
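The data preparation steps above can be sketched in plain Python. This is a minimal illustration, assuming each cleaned record is a dict with `instruction` and `response` keys. The prompt template, the field names, and the helper names `format_example` and `train_val_split` are hypothetical; the real format depends on the LLaMA variant and the dataset.

```python
import random

# Hypothetical instruction-style template; the real format depends on the
# model variant and on how the dataset is structured.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n{response}"


def format_example(record):
    """Render one cleaned record as a single training string."""
    return PROMPT_TEMPLATE.format(
        instruction=record["instruction"].strip(),
        response=record["response"].strip(),
    )


def train_val_split(records, val_fraction=0.1, seed=42):
    """Shuffle a copy of the records and split off a validation set."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


# Placeholder records, used only to show the call pattern.
records = [
    {"instruction": f" Question {i} ", "response": f" Answer {i} "}
    for i in range(20)
]
train, val = train_val_split(records, val_fraction=0.25)
train_texts = [format_example(r) for r in train]
print(len(train), len(val))
```

A fixed seed keeps the split reproducible, so later runs are evaluated against the same validation examples.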

Environment Setup:

- Install essential libraries such as transformers and accelerate

- Configure GPU environment with CUDA if needed

- Download pre-trained LLaMA model
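Before downloading any weights, it can help to confirm that the environment is ready. The sketch below uses only the standard library to check whether the packages are importable, and asks PyTorch about CUDA only if PyTorch is present. The helper names `check_packages` and `cuda_available` are illustrative, and the package list is an assumption based on the libraries named above.

```python
import importlib.util


def check_packages(names):
    """Report which packages are importable, without importing them."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def cuda_available():
    """Return True only if PyTorch is installed and reports a CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    import torch  # imported lazily so the check also works without PyTorch
    return torch.cuda.is_available()


status = check_packages(["transformers", "accelerate", "torch"])
for name, installed in status.items():
    print(f"{name}: {'ok' if installed else 'missing'}")
print("CUDA available:", cuda_available())
```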

Model Configuration:

- Select appropriate model size (7B, 13B, etc.)

- Define hyperparameters including learning rate and batch size

- Specify training parameters like epochs and gradient accumulation
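Batch size and gradient accumulation interact: the number of examples behind each optimizer update is the per-device batch size times the accumulation steps times the number of devices. A minimal sketch of a configuration object that makes this explicit follows. The class `TrainConfig` and all default values are illustrative placeholders, not recommendations, and the step count is approximate since training frameworks may round differently.

```python
from dataclasses import dataclass
import math


@dataclass
class TrainConfig:
    # All values below are illustrative placeholders, not recommendations.
    model_size: str = "7B"
    learning_rate: float = 2e-4
    per_device_batch_size: int = 4
    gradient_accumulation_steps: int = 8
    num_devices: int = 1
    num_epochs: int = 3

    @property
    def effective_batch_size(self):
        """Examples contributing to each optimizer update."""
        return (
            self.per_device_batch_size
            * self.gradient_accumulation_steps
            * self.num_devices
        )

    def total_optimizer_steps(self, num_train_examples):
        """Approximate optimizer updates for the run, rounding each epoch up."""
        steps_per_epoch = math.ceil(num_train_examples / self.effective_batch_size)
        return steps_per_epoch * self.num_epochs


config = TrainConfig()
print(config.effective_batch_size)           # 4 * 8 * 1
print(config.total_optimizer_steps(10_000))  # ceil(10000 / 32) * 3
```

Gradient accumulation lets a small per-device batch behave like a larger one when GPU memory is limited, at the cost of more forward and backward passes per update.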

Fine-Tuning Process:

- Apply efficient fine-tuning techniques like LoRA and QLoRA

- Train model using prepared dataset

- Monitor training progress and metrics
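LoRA keeps the pre-trained weight matrix frozen and trains two small low-rank factors whose product is added to it; QLoRA applies the same idea on top of a base model quantized to 4 bits. The arithmetic below shows why this is efficient. It is a sketch only: the layer dimension and rank are assumed example values, and the function names are hypothetical.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the low-rank factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def full_params(d_out, d_in):
    """Parameters in the frozen full weight matrix W (d_out x d_in)."""
    return d_out * d_in


d = 4096   # assumed hidden size of one projection layer
rank = 8   # assumed LoRA rank

lora = lora_trainable_params(d, d, rank)
full = full_params(d, d)
print(f"LoRA: {lora:,} trainable vs {full:,} frozen ({lora / full:.2%})")
```

For a single square layer with these assumed sizes, the adapter holds well under 1% of the parameters of the matrix it modifies, which is what makes fine-tuning feasible on modest hardware.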

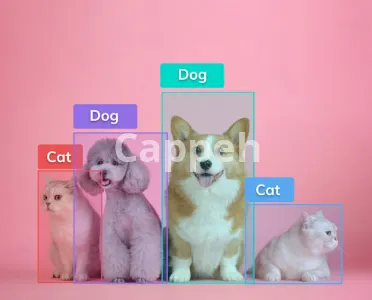

Evaluation:

- Evaluate model performance on validation set

- Compare results with baseline metrics

- Conduct qualitative analysis of model outputs
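One common quantitative metric for the validation step is perplexity, the exponential of the mean per-token cross-entropy loss. The sketch below computes it and compares a fine-tuned model against a baseline. The loss values are made-up placeholders used only to show the calculation, and the helper names are hypothetical.

```python
import math


def perplexity(token_losses):
    """Perplexity = exp(mean cross-entropy loss in nats per token)."""
    mean_loss = sum(token_losses) / len(token_losses)
    return math.exp(mean_loss)


def relative_improvement(baseline, candidate):
    """Fractional reduction of a lower-is-better metric versus the baseline."""
    return (baseline - candidate) / baseline


# Placeholder per-token losses, not real measurements.
baseline_losses = [2.3, 2.1, 2.4, 2.2]
finetuned_losses = [1.9, 1.8, 2.0, 1.7]

base_ppl = perplexity(baseline_losses)
tuned_ppl = perplexity(finetuned_losses)
print(f"baseline perplexity:   {base_ppl:.2f}")
print(f"fine-tuned perplexity: {tuned_ppl:.2f}")
print(f"relative improvement:  {relative_improvement(base_ppl, tuned_ppl):.1%}")
```

Perplexity alone does not capture output quality, which is why the qualitative review of generated text remains part of the evaluation.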

Iteration and Optimization:

- Adjust hyperparameters based on outcomes

- Experiment with various fine-tuning methods

- Refine dataset if needed
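A simple way to organize hyperparameter experiments is to enumerate a small grid of candidate settings and run each one in turn. The sketch below only generates the candidates; the search space values and the helper name `candidate_configs` are assumptions for illustration.

```python
from itertools import product

# Assumed search space; the values are placeholders, not recommendations.
search_space = {
    "learning_rate": [1e-4, 2e-4, 5e-4],
    "lora_rank": [8, 16],
}


def candidate_configs(space):
    """Return one dict per combination of the listed hyperparameter values."""
    keys = list(space)
    return [
        dict(zip(keys, values))
        for values in product(*(space[key] for key in keys))
    ]


configs = candidate_configs(search_space)
for cfg in configs:
    print(cfg)
```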

Model Deployment:

- Export fine-tuned model

- Optimize for inference, including quantization if required

- Prepare model for deployment environment
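Quantization matters for deployment mainly because of memory. A rough estimate of the space needed for the weights alone is the parameter count times the bits per parameter. The sketch below assumes a 7-billion-parameter model and ignores activations, the KV cache, and any quantization metadata, so real usage will be higher. The function name is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


num_params = 7_000_000_000  # assumed size of a "7B" model

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(num_params, bits):.1f} GB")
```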

Shop Location: Auckland, New Zealand
